7 Recommendations for Current and Potential OpenAI Customers

Continuity risk must be balanced with the feature superiority of OpenAI ChatGPT, and companies need to design for and test LLM switchability

Written by AI Analysts at GAI Insights (Adam, Amanda, John, Julio, Tania, and Tim)

This issue is jam-packed with value

7 recommendations for companies using or considering OpenAI LLM offerings

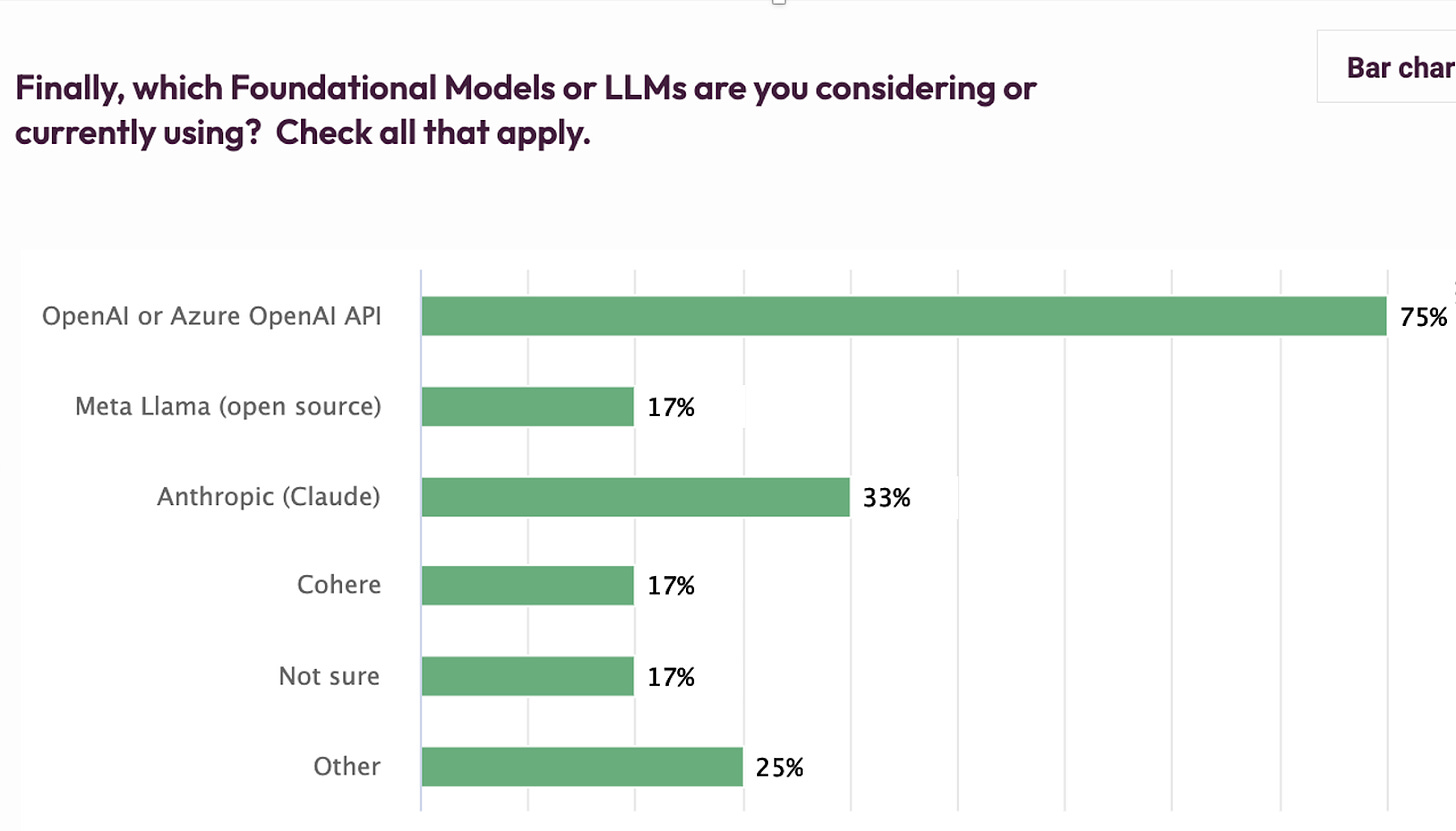

Early results of our LLM Adoption Survey (which LLMs are being considered most often; can you guess #2 and #3?)

3 essential GenAI news items from last week (and why) - stay current in minutes

Join our free webinar about 12 issues to consider when deciding between open source and proprietary LLMs on Dec 1 at noon EST

7 Recommendations for OpenAI Customers and Prospects

The recent management disruption at OpenAI was material, and we don’t have confidence that the situation has fully stabilized.

Here is some of our thinking that informed our recommendations:

Based on interviews with dozens of companies and developers, the GAI Insights community, and our own use, the capabilities of OpenAI ChatGPT are 4-6 months ahead of all other LLM models

Microsoft will do whatever is necessary to ensure reliability for enterprise customers using OpenAI through Azure

Open source is a capability and not a solution. Total cost of ownership, risk mitigation, and LLM-fit-to-use-case are key factors in deciding among models

Changing LLM models is not a straightforward 1:1 swap, as outputs for your specific use case will vary by model and choosing the best model is not straightforward. One needs to test the same prompts with different LLMs to understand differences

Model interoperability can be designed in but is not free (e.g., tools like LangChain lower the cost of changing models, but development and testing are still required)

The Big-3 cloud providers (Microsoft, AWS, and Google) are and will remain the dominant suppliers of LLM solutions because of their ability to more easily fit a company’s existing security framework and because they are rapidly developing supporting tools and capabilities. All three support proprietary and open source LLMs.
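To make the interoperability point concrete, here is a minimal sketch of designing switchability in. It assumes every LLM can be wrapped as a function from prompt text to response text. The names `ModelRouter`, `stub_primary`, and `stub_fallback` are hypothetical, and the stubs stand in for real vendor SDK calls, which would need their own wrappers and testing.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Assumption: each backend is wrapped as a plain function (prompt -> response).
ModelFn = Callable[[str], str]


@dataclass
class ModelRouter:
    """Routes every call through one interface so the backend can be swapped."""
    backends: Dict[str, ModelFn]
    active: str

    def __post_init__(self) -> None:
        if self.active not in self.backends:
            raise KeyError(f"unknown backend: {self.active}")

    def complete(self, prompt: str) -> str:
        # Application code calls this method and never a vendor SDK directly.
        return self.backends[self.active](prompt)

    def switch(self, name: str) -> None:
        if name not in self.backends:
            raise KeyError(f"unknown backend: {name}")
        self.active = name


# Hypothetical stand-ins for real model calls.
def stub_primary(prompt: str) -> str:
    return f"[primary] {prompt}"


def stub_fallback(prompt: str) -> str:
    return f"[fallback] {prompt}"


router = ModelRouter(
    backends={"primary": stub_primary, "fallback": stub_fallback},
    active="primary",
)
```

A wrapper like this only makes the swap cheap to perform. Whether the new model's outputs are acceptable for a given use case still has to be tested.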

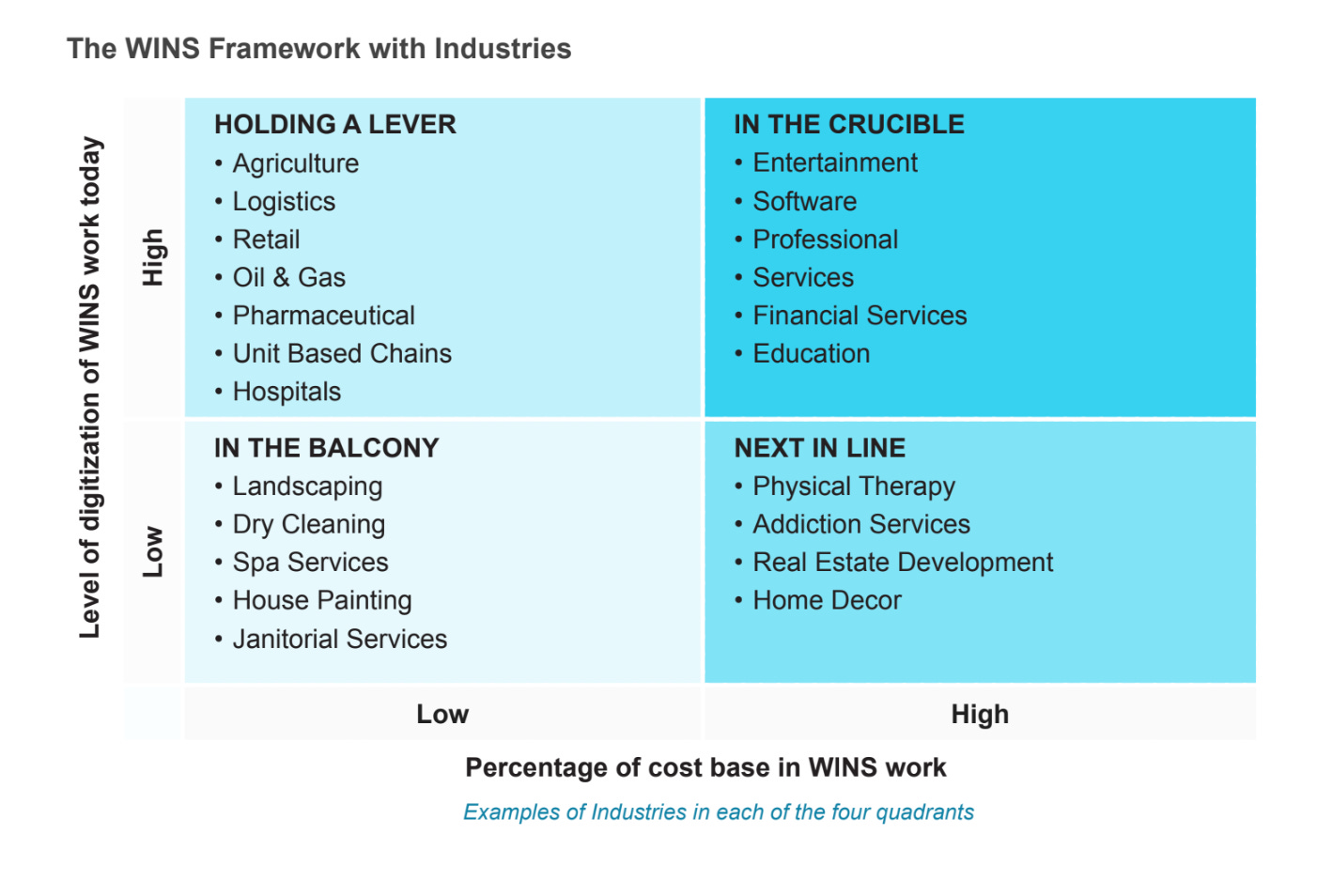

Our recommendations are especially relevant for companies in the top half of our WINS framework (WINS work is explained below).

Our 7 recommendations are:

If you are in production with OpenAI through Azure and trust Microsoft, monitor the situation at OpenAI but understand that new risk has been added to your deployments.

If you are in production through contracting with OpenAI directly, move to Azure to reduce continuity risk.

If you are considering switching away from OpenAI, look first at your existing cloud provider. Understand that OpenAI ChatGPT has unique features that are highly valued by some (JSON output, a well-tested API, multimodal capabilities such as text and image output, best performance, etc.), so there is a clear trade-off between features and risk.

If you are in a sales cycle with OpenAI, secure bids from your existing cloud providers, all of whom are likely pricing aggressively.

Invest in an “experimental line” or staging environment alongside your LLM production system to test alternative LLMs. Test and document LLM switchability; don’t just architect it in, as we expect more LLM innovation over the next 36 months.

Maintain a comprehensive prompt library and testing results by use case for output comparability when evaluating different LLMs.

Recognize that your organization has dozens of LLM needs and that many solutions exist. Investigate LLM solutions from incumbent vendors like Salesforce or SAP and low-cost, no-code solutions like CustomGPT for some use cases.
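The prompt library and switchability testing recommended above can start very small. The sketch below is one possible shape, not a prescribed tool. It assumes each model is wrapped as a function from prompt to response, and the names `run_prompt_library` and `find_differences` are hypothetical. The exact-match comparison is a deliberate simplification, since real LLM outputs usually need a rubric or human review to compare.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Assumption: each model is wrapped as a plain function (prompt -> response).
ModelFn = Callable[[str], str]


def run_prompt_library(
    library: Dict[str, List[str]],
    models: Dict[str, ModelFn],
) -> List[Dict[str, str]]:
    """Run every prompt, grouped by use case, against every model."""
    rows = []
    for use_case, prompts in library.items():
        for prompt in prompts:
            for model_name, model in models.items():
                rows.append({
                    "use_case": use_case,
                    "prompt": prompt,
                    "model": model_name,
                    "output": model(prompt),
                })
    return rows


def find_differences(rows: List[Dict[str, str]]) -> List[Tuple[str, str]]:
    """Return (use_case, prompt) pairs where the models did not all agree.

    Exact string comparison is a placeholder for a real evaluation step.
    """
    outputs = defaultdict(set)
    for row in rows:
        outputs[(row["use_case"], row["prompt"])].add(row["output"])
    return sorted(key for key, seen in outputs.items() if len(seen) > 1)
```

Storing the rows per use case (for example as CSV or JSON) gives the documented testing results to compare against when a new model or model version appears.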

“WINS” work explained. The term “knowledge work” is too broad. We define “WINS Work” as tasks, functions, or possibly an entire company or industry that depends on the manipulation and interpretation of Words, Images, Numbers, and Sounds (WINS). Heart surgeons, chefs, farmers, and car mechanics are knowledge workers, but not WINS workers, since there is a large physical component of value creation that can’t yet be easily automated. Software programmers, accountants, and marketing professionals are WINS workers, as the value from their tasks or functions is driven mainly by manipulating words, images, numbers, and sounds and rarely has a physical component.

Industries and companies with a high percentage of WINS work that is already digitized face unprecedented opportunities and competitive threats because of GenAI. We classify them as “Holding a Lever” or “In the Crucible”.

Read a free excerpt from our Corporate Buyers' Guide to LLMs.

The report will save you and your team at least 40 hours in research time (an $8K value), reduce deployment risk, and shorten time-to-value. It’s available with memberships that range from $2K to $45K.

We've already received many public endorsements of the Buyers' Guide.

Learn more about the value of investing in a membership to receive the report and frequent updates to keep you informed in this fast-changing LLM vendor market.

Some early results from our GenAI Adoption Challenges Survey:

Take the 6 min survey here and receive summarized results to benchmark your GenAI investments.

Confused by Open Source vs. Proprietary LLMs? Register for a free, online session on Friday Dec 1 at noon EST to discuss the 12 issues to consider when deciding between these two technical approaches.

Essential and Important GenAI news for week ending Nov 24 and why

Each weekday our AI Analysts meet and rate enterprise GenAI news as (E) essential, (I) important, or (O) optional for the AI Leader and investor.

The following stories last week were rated E or I and the bullets are the rationale.

ESSENTIAL

Sam Altman to Return as CEO of OpenAI

Sam Altman’s return as CEO of OpenAI provides much-needed stability to OpenAI and its partners and puts a stop to the speculation about the future of OpenAI and AGI

It also consolidates Sam’s influence over the direction of OpenAI and means that OpenAI will be more commercially focused

The debacle forced Microsoft to be even more committed to supporting enterprise customers using OpenAI through Azure

64% of workers who use GenAI have passed off work as their own

Executives simply need to know this. Your employees are using these tools, regardless of employee use bans. For some executives, it is the worst of both worlds as you are living with the risk and not getting the value

Over time, this may lead to a misrepresentation of employees' actual skills and capabilities, resulting in a mismatch between job responsibilities and employee competencies, and potentially undermining the integrity and reliability of work outcomes.

FOX Sports expands Google Cloud partnership, generative AI to automate archived sports video search

This article focuses on the use of AI to improve internal employee productivity. Enterprise leaders will prioritize experimenting with AI in use cases that improve internal employee productivity before use cases that are customer facing (riskier).

This capability can be used by police, airports, other media companies, tourist locations like Disney World, concert venues, etc., and shows that “dialogue to date” extends past having conversations with text and PDFs.

IMPORTANT

Billionaire Group Including Eric Schmidt Building AI Research Lab In Paris

More evidence that Europe is in the game to develop OpenAI-like capabilities of its own

Meta disbanded its Responsible AI team

Another firm signals a shift in how ethical and societal implications of AI are prioritized, potentially impacting industry standards and raising concerns about the balance between innovation and responsibility in AI development

Nvidia launches super-fast Spectrum-X Ethernet to accelerate generative AI workloads

As organizations plan new hardware for their GenAI implementations, it is important to understand the latest developments not only in GPUs but also in the associated network infrastructure

Spectrum-X Ethernet is a network accelerator offering 1.6 times higher networking performance

Orca 2: Teaching Small Language Models How to Reason

For language models, reasoning is a big challenge along with hallucination. Solving for reasoning through distillation is helping Small Language Models perform as well as models which are 3-4 times bigger.

This means that once these small models become better, enterprises don’t need to spend millions of dollars on compute and large models from big vendors and can work with these smaller, open source models to get the same results.

Anthropic announces Claude 2.1

As OpenAI was battling for stability, Anthropic launched a new version of Claude ahead of the upcoming AWS user conference in Vegas (note AWS is a major investor in Anthropic). The updates to context length, accuracy, and extensibility make it more suitable for business applications.

The larger context window of 200,000 tokens, surpassing OpenAI's 128,000, allows for bigger documents.

Nvidia’s revenue triples as AI chip boom continues

The high demand for Nvidia’s GPUs has resulted in revenue growth of 206% year over year for the quarter ending Oct 29.

As organizations plan their GenAI deployments for next year, they need to factor in the large supply gap for Nvidia’s GPUs

Google Bard can now understand complex YouTube videos

This shows the ability to get value from video at scale, so value can come from meetings, technical demonstrations, etc.

Our weekly Learning Lab on Mondays at 7p ET / 4p PT remains a wonderful sharing and learning experience. Join the 50-70 enterprise GenAI enthusiasts who attend each week to learn about and discuss enterprise GenAI.

“Life is very short and anxious for those who forget the past, neglect the present, and fear the future.” — Seneca

Onward,

Paul